Sora by OpenAI: Potential and Limitations

Sora promises to revolutionize video creation with AI but has significant limitations in accurately reproducing real places.

OpenAI continues to surprise the world of artificial intelligence with increasingly advanced innovations. Among the most recent is Sora, the AI-powered video generator that is gradually being integrated into the ChatGPT ecosystem. This tool promises to revolutionize video content creation by allowing users to generate videos simply through text descriptions.

Despite this potential, the technology still has significant limitations that deserve a closer look. My recent experience with the tool revealed both its strengths and its evident shortcomings, especially when it comes to accurately reproducing real places and objects.

How Sora Works and Its Integration with ChatGPT

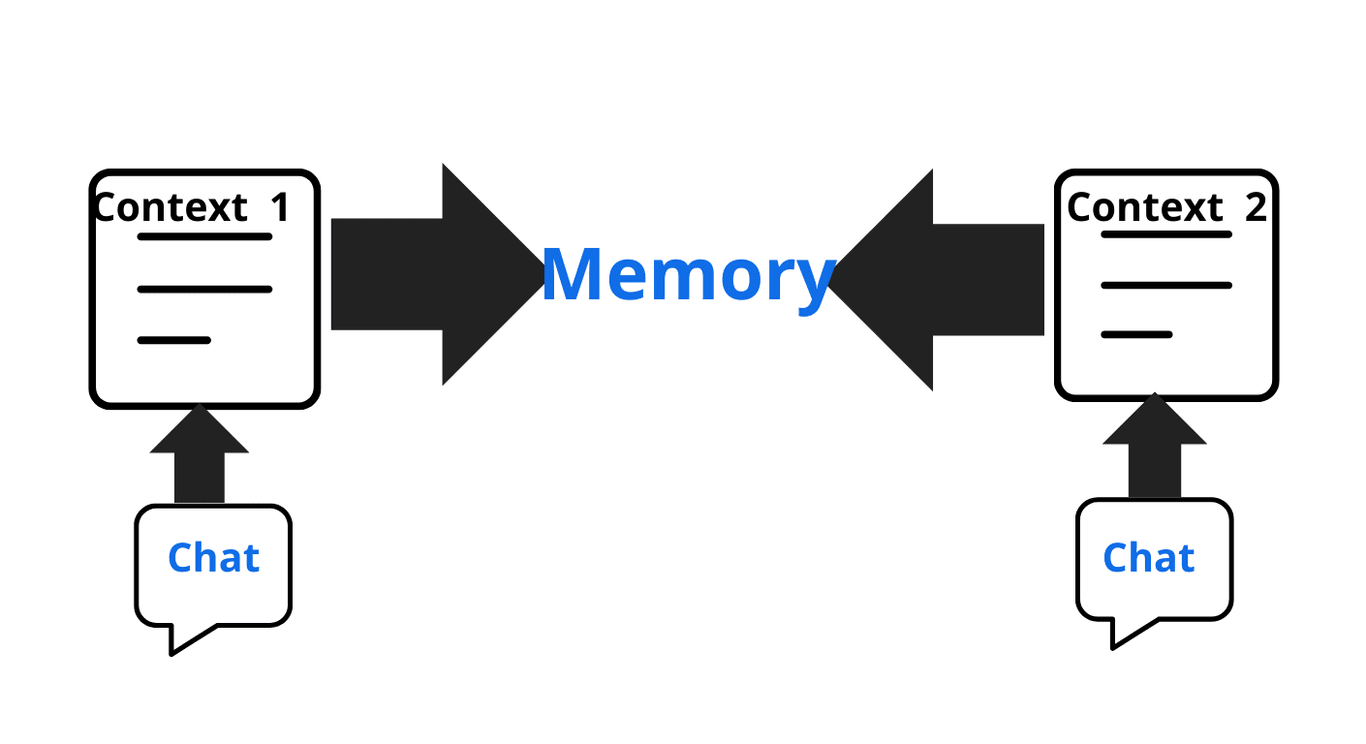

Sora was initially launched as a standalone web app, but OpenAI has confirmed that it is working on integrating it directly into the ChatGPT application, creating a unified experience for users. This integration will allow seamless transitions from text generation to video creation within the same interface. Rohan Sahai, Sora's product lead, has confirmed this direction, although he has not provided a specific timeline for the completion of the integration.

Currently, to use Sora, users must access it through the dedicated platform (sora.com) or directly from ChatGPT by clicking on the Sora button in the upper left corner of the interface. The generation process is conceptually simple: the user describes what they want to see, and the AI transforms this description into a video. Behind this apparent simplicity, however, lies a complex architecture. According to OpenAI's technical report, Sora is a diffusion model built on a transformer, the same family of architecture that underlies the GPT (Generative Pre-trained Transformer) models, adapted here for video generation.

To create a video, simply enter a text description in the prompt at the bottom of the screen. In addition to text, various settings can be customized: using an image or video as a starting point, selecting a preset style, choosing the aspect ratio (16:9, 1:1, or 9:16), setting the resolution (480p, 720p, or 1080p), defining the video duration (5, 10, 15, or 20 seconds), and selecting how many variations to generate (1, 2, or 4).
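The options listed above can be captured in a small settings object that rejects unsupported combinations before anything is submitted. This is only an illustrative sketch: `GenerationSettings` and its field names are hypothetical, the allowed values are simply those quoted in this article, and nothing here calls or describes an official Sora API.

```python
from dataclasses import dataclass

# Allowed values as listed in the article; not an official API schema.
ASPECT_RATIOS = {"16:9", "1:1", "9:16"}
RESOLUTIONS = {"480p", "720p", "1080p"}
DURATIONS_S = {5, 10, 15, 20}
VARIATIONS = {1, 2, 4}


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical container for the settings exposed in the Sora interface."""

    prompt: str
    aspect_ratio: str = "16:9"
    resolution: str = "480p"
    duration_s: int = 5
    variations: int = 1

    def __post_init__(self) -> None:
        # Validate each field against the values the article lists.
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.aspect_ratio not in ASPECT_RATIOS:
            raise ValueError(f"unsupported aspect ratio: {self.aspect_ratio}")
        if self.resolution not in RESOLUTIONS:
            raise ValueError(f"unsupported resolution: {self.resolution}")
        if self.duration_s not in DURATIONS_S:
            raise ValueError(f"unsupported duration: {self.duration_s}")
        if self.variations not in VARIATIONS:
            raise ValueError(f"unsupported number of variations: {self.variations}")


settings = GenerationSettings(
    prompt="A lighthouse on a rocky coast at sunset",
    aspect_ratio="9:16",
    resolution="720p",
    duration_s=5,
    variations=2,
)
```

Writing the settings down this way makes it easy to keep track of which combination produced which result when comparing several attempts.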

Plans, Pricing, and Technical Limitations

One of Sora’s most limiting aspects concerns accessibility and costs. The service is available exclusively to subscribers of ChatGPT’s paid plans, with significant differences between the available options.

The ChatGPT Plus subscription, priced at $20 per month plus taxes, allows users to generate videos with restricted durations: up to 5 seconds at 720p resolution or 10 seconds at 480p, with a cap of 50 priority videos (1,000 credits).

For those needing more flexibility, the ChatGPT Pro subscription comes at a much higher cost of $200 per month plus taxes. This plan enables the creation of videos up to 20 seconds in 1080p resolution, without a watermark, with a limit of 500 priority videos (10,000 credits) and support for 5 simultaneous generations. Even so, the caps on duration and resolution make it difficult to use Sora for professional work: 4K resolution is not available, and even on the most expensive plan, videos cannot exceed 20 seconds in length.

Each video generation consumes a certain amount of monthly credits, which varies based on the video’s duration and resolution. Users can check their remaining credit balance on the Sora website, in the "My Plan" section.
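To make the plan limits concrete, the following sketch checks whether a requested video fits a plan and computes the average credit budget per priority video implied by the quoted caps. The names `PLAN_LIMITS`, `PLAN_CREDITS`, `fits_plan`, and `average_credits_per_video` are hypothetical, the figures are those quoted in this article, and the table assumes that Pro allows 20 seconds at every resolution, which the article states only for 1080p. The actual cost of a given video depends on its duration and resolution, so the average is only a rough guide.

```python
# Maximum duration in seconds per resolution, as quoted in the article.
# Assumption: Pro allows 20 s at every resolution, not only at 1080p.
PLAN_LIMITS = {
    "plus": {"480p": 10, "720p": 5},
    "pro": {"480p": 20, "720p": 20, "1080p": 20},
}

# (monthly credits, priority-video cap) as quoted in the article.
PLAN_CREDITS = {"plus": (1_000, 50), "pro": (10_000, 500)}


def fits_plan(plan: str, resolution: str, duration_s: int) -> bool:
    """Return True if the requested video is within the plan's quoted limits."""
    max_duration = PLAN_LIMITS[plan].get(resolution)
    return max_duration is not None and duration_s <= max_duration


def average_credits_per_video(plan: str) -> float:
    """Average credit budget per priority video implied by the quoted caps."""
    credits, videos = PLAN_CREDITS[plan]
    return credits / videos


print(fits_plan("plus", "720p", 10))      # 10 s at 720p exceeds the Plus limit
print(fits_plan("pro", "1080p", 20))      # within the Pro limit
print(average_credits_per_video("plus"))  # 1,000 credits / 50 videos
```

Both plans work out to the same average of 20 credits per priority video, so the price difference buys volume, duration, and resolution rather than a cheaper per-video rate.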

The Limitations of Sora’s Generative Capabilities

Despite the exciting promises, early reviews highlight that Sora still has significant limitations in its ability to generate realistic and coherent videos based on user instructions. This issue becomes particularly evident when using complex prompts or requesting an accurate depiction of real-world locations or objects.

My personal experience confirms these challenges. I tested Sora by requesting a short video of a monument in my city. Even though I used the meta prompt technique (essentially leveraging ChatGPT to generate an optimized prompt for Sora) and repeated the attempt multiple times, the results were consistently unsatisfactory. Sora systematically introduced fabricated and non-existent elements, in some cases even visibly surreal details, significantly altering the actual appearance of the monument.

This phenomenon could be attributed to several factors. First, AI models like Sora tend to "hallucinate", meaning they generate content that does not correspond to reality, relying on learned patterns rather than accurate information about the requested subject. Second, the model’s ability to understand references to specific and lesser-known locations remains limited. A generative model does not look places up in a database of facts, so when it has seen little about a location during training, it fills in the missing details with plausible-looking inventions.

Strategies to Improve Results with Sora

While acknowledging its current limitations, there are several strategies that can help achieve better results with Sora. The structure of the prompt plays a crucial role: it is advisable to clearly specify the subject, the action or event to be depicted, the setting or environment, and style preferences.

Using adjectives and rich descriptive language can significantly enhance the output, as can incorporating instructions on movement and action, such as specifying camera movements or perspectives (“first-person shot,” “panoramic view”). For complex scenes, an effective strategy is to break the scene into smaller actions, generating separate videos that can later be combined using an external video editor.
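The prompt structure described above (subject, action, setting, style, plus an optional camera instruction) can be sketched as a small helper, together with a second helper that splits a complex scene into one prompt per action. Both `build_prompt` and `split_scene` are hypothetical names, and the sentence layout is just one reasonable convention rather than a format Sora requires.

```python
from typing import List, Optional


def build_prompt(
    subject: str,
    action: str,
    setting: str,
    style: str,
    camera: Optional[str] = None,
) -> str:
    """Assemble a prompt from the components the article recommends."""
    sentences = [f"{subject} {action} in {setting}.", f"Style: {style}."]
    if camera:
        # Camera movement or perspective, e.g. "first-person shot".
        sentences.append(f"Camera: {camera}.")
    return " ".join(sentences)


def split_scene(
    subject: str,
    actions: List[str],
    setting: str,
    style: str,
    camera: Optional[str] = None,
) -> List[str]:
    """Create one prompt per action so each clip can be generated separately."""
    return [build_prompt(subject, action, setting, style, camera) for action in actions]


clips = split_scene(
    subject="A red fox",
    actions=["wakes up and stretches", "runs between the trees"],
    setting="a snowy forest",
    style="cinematic, soft morning light",
    camera="panoramic view",
)
for clip_prompt in clips:
    print(clip_prompt)
```

Keeping subject, setting, and style identical across the split prompts helps the separate clips look consistent once they are joined in a video editor.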

An iterative approach is essential: achieving a perfect result on the first attempt is unlikely. It’s important to analyze what works and what doesn’t, then refine the prompt accordingly. Sora’s post-generation tools—such as re-cut, remix, blend, and loop—can be used to fine-tune the result, preserving the best parts while discarding the rest.

Considerations on the Future of Sora

OpenAI is well aware of the potential negative implications of this technology. Even Sam Altman, co-founder and CEO of OpenAI, has admitted that he "loses sleep at night" thinking about the possible negative consequences of AI. It’s no coincidence that Sora was not immediately released to the public, not even in a trial version, as OpenAI was still working on how to safeguard it from issues related to the creation of fake videos that could be mistaken for authentic ones.

To address these concerns, OpenAI is collaborating with the Coalition for Content Provenance and Authenticity (C2PA), a consortium that includes organizations like the BBC and The New York Times, to develop labels that can identify AI-generated content. This involves embedding additional metadata to highlight the artificial nature of the videos and implementing restrictions on online distribution if shared guidelines are violated.